The Hardware Platform Interface (HPI) is an open specification that defines an application programming interface (API) for platform management of computer systems. The API supports tasks including reading temperature or voltage sensors built into a processor, configuring hardware registers, accessing system inventory information like model numbers and serial numbers, and performing more complex activities, such as upgrading system firmware or diagnosing system failures.

HPI is designed for use with fault-tolerant and modular high-availability computer systems, which typically include automatic fault detection features and hardware redundancy so that they can provide continuous Service Availability. Additional features common in hardware platforms used for high-availability applications include online serviceability and upgradeability via hot-swappable modules.

The HPI specification is developed and published by the Service Availability Forum (SA Forum) and made freely available to the public.

History

A primary motivator for the development of the HPI specification was the emergence of modular computer hardware platforms and commercial off-the-shelf (COTS) systems in the late 1990s and early 2000s. This included CompactPCI platforms and, later, the AdvancedTCA and MicroTCA (xTCA) platforms standardized by the PCI Industrial Computer Manufacturers Group (PICMG). These platforms include hardware management infrastructures based on the Intelligent Platform Management Interface (IPMI). Concurrently, major enterprise vendors such as HP and IBM also developed modular and bladed systems.

The need for the HPI specification was first identified by an industry group called the “High Availability Forum,” which met for several months in 2000 to discuss issues relating to building high-availability computer systems using open architecture technology. This group published a white paper, “Providing Open Architecture High Availability Solutions” in early 2001. Growing out of that work, Intel Corporation began a project to define a standard hardware platform management API named the Universal Chassis Management Interface (UCMI). This work was migrated to the newly formed SA Forum consortium and was published as the Hardware Platform Interface in October 2002. The original HPI specification, SAI-HPI-A.01.01, was the first specification published by the SA Forum.

From 2002 onwards, several updates to the HPI specification have been published. Additionally, specifications for accessing an HPI implementation via Simple Network Management Protocol (SNMP) and specifications describing the use of HPI on AdvancedTCA and MicroTCA platforms have been produced. Table 1 lists all specifications published by the SA Forum in the HPI family.

| Specification Label | Date of Publication | Notes |
|---|---|---|
| SAI-HPI-A.01.01 | October 7, 2002 | Original HPI specification |
| SAI-HPI-B.01.01 | May 3, 2004 | Major revision to the base HPI specification. Addressed implementation and usability issues in original specification |
| SAI-HPI-SNMP-B.01.01 | May 3, 2004 | SNMP MIB for accessing HPI implementations |
| SAI-HPI-B.02.01 | January 18, 2006 | Minor revision to the base HPI specification. Added FUMI, DIMI and Load Management capability. |
| SAIM-HPI-B.01.01-ATCA | January 18, 2006 | HPI to AdvancedTCA mapping specification |
| SAI-HPI-B.03.01 | October 21, 2008 | Minor revision to the base HPI specification. Enhancements to FUMI; some new API functions |
| SAI-HPI-B.03.02 | November 20, 2009 | Minor corrections to the base HPI specification |
| SAIM-HPI-B.03.02-xTCA | February 19, 2010 | Major revision to the AdvancedTCA mapping specification. Includes mapping for MicroTCA platforms as well as AdvancedTCA. |

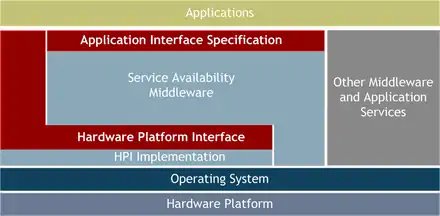

The HPI specifications and the Application Interface Specification (AIS) have been developed separately within the SA Forum. Although they are both intended to address functionality required for the highest levels of Service Availability, they are usable independently of each other. The AIS specifications can be implemented and used for high-availability clustering middleware that does not implement hardware platform management, and the HPI specification can be implemented by platform providers and used directly by application or management programs without the use of other SA Forum management middleware.

The primary intersection between the AIS and HPI specifications is found in the AIS Platform Management Service (PLM). The PLM service is defined with an expectation that hardware platform management will be provided via an implementation of the HPI specification on the target hardware platform.

HPI architecture

The HPI specification does not dictate or assume which platform management capabilities should be present in a hardware platform. Rather, it provides a generic and consistent way to model whatever capabilities are present and provides a way for user application programs to learn the details of the platform management capabilities that are available.

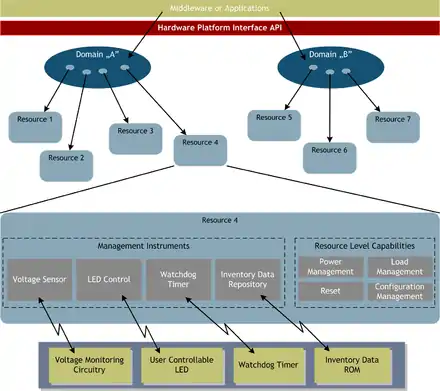

HPI organizes hardware platform management capabilities into a set of Resources. Each Resource hosts a set of Management Instruments that can monitor and control parts of the hardware platform. The Management Instruments abstract management components built into the platform, like temperature or voltage sensors, configuration registers and display elements, or provide interfaces to management functions, such as upgrading firmware and running diagnostics. These Management Instruments are described in Resource Data Records (RDRs) that are accessible by the user application, so the application can discover the configuration and capabilities of each Resource.
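The relationship between a Resource and the RDRs that describe its Management Instruments can be pictured with a small data model. The following Python sketch is illustrative only: the class names `Rdr` and `Resource`, their fields, and the `discover` helper are hypothetical and are not part of the HPI API, which is defined in C by the specification.

```python
from dataclasses import dataclass, field


@dataclass
class Rdr:
    """Hypothetical stand-in for a Resource Data Record."""
    instrument_type: str  # e.g. "SENSOR" or "CONTROL" (illustrative labels)
    instrument_id: int
    entity_path: str      # which hardware Entity the instrument manages
    description: str


@dataclass
class Resource:
    """Hypothetical stand-in for an HPI Resource hosting instruments."""
    resource_id: int
    rdrs: list = field(default_factory=list)


def discover(resource, instrument_type):
    """Return the RDRs of one instrument type hosted by a Resource."""
    return [r for r in resource.rdrs if r.instrument_type == instrument_type]


# Example data: one Resource describing three Management Instruments.
blade = Resource(resource_id=7, rdrs=[
    Rdr("SENSOR", 1, "BLADE.4", "CPU temperature"),
    Rdr("SENSOR", 2, "BLADE.4", "12V rail voltage"),
    Rdr("CONTROL", 1, "BLADE.4", "Fault LED"),
])
sensors = discover(blade, "SENSOR")
```

An application written this way learns what a Resource can do by reading its records rather than by assuming a fixed set of capabilities.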

Although HPI Resources are abstract structures, they are typically used to model the management capabilities of individual management controllers in the hardware platform. For example, in AdvancedTCA (ATCA) platforms, each computing blade usually includes an IPMI Management Controller (IPMC) responsible for hardware management tasks related to that blade. An HPI interface for an ATCA platform will normally include a Resource for each IPMC.

Resources in HPI are organized into Domains. Often, an HPI implementation will implement only one Domain for all Resources, but it is possible to subdivide the system into multiple Domains, if needed. For example, in some modular systems, various modules may be owned and managed by different users. To support this with HPI, all the Resources used to manage the modules owned by a specific user may be placed in a single Domain, and that user is given access only to that Domain.

HPI user programs access the platform management infrastructure by opening a Session with a specific HPI Domain. With this Session established, the user program may then make various HPI function calls to query or update information about that Domain, or about any of the Resources that are currently members of that Domain.

While HPI Management Instruments are organized and addressed by Domain and Resource, the hardware components that are managed by those Management Instruments are identified individually in the RDRs associated with each Management Instrument. Physical hardware components in HPI are called Entities and are identified with an Entity Path. An Entity Path contains multiple elements, with the first element describing where the hardware Entity is located in a containing Entity, the second element describing where that Entity is located in a larger container, and so on. For example, a redundant power supply for a chassis in a system that spans multiple racks might have the entity path of POWER_SUPPLY.2,SUBRACK.3,RACK.1.
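The innermost-first ordering of an Entity Path can be illustrated with a short Python sketch. It parses the textual notation used in the example above (`TYPE.LOCATION` elements separated by commas); the function names are hypothetical, and the in-memory representation used by actual HPI implementations differs.

```python
def parse_entity_path(text):
    """Split 'TYPE.N,TYPE.N,...' into (type, location) pairs, innermost first."""
    elements = []
    for part in text.split(","):
        entity_type, _, location = part.strip().rpartition(".")
        elements.append((entity_type, int(location)))
    return elements


def is_contained_in(path, container):
    """True if `container` matches the outermost elements of `path`."""
    start = len(path) - len(container)
    return start >= 0 and path[start:] == container


psu = parse_entity_path("POWER_SUPPLY.2,SUBRACK.3,RACK.1")
subrack = parse_entity_path("SUBRACK.3,RACK.1")
other_rack = parse_entity_path("RACK.2")
```

Here `psu[0]` is the power supply itself and `psu[-1]` is the outermost container, so checking whether an Entity sits inside a given subrack reduces to comparing the trailing elements of the two paths.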

Because each Management Instrument is associated with a specific Entity Path, it is possible for one HPI Resource to handle platform management for more than one Entity. It is also possible for a single Entity to be managed via multiple HPI Resources. This possibility of an arbitrary mix-and-match between HPI Resources and the hardware Entities being managed can seem confusing, but it is an important feature of the HPI architecture. This is because it allows modeling of complex management infrastructures that may include both in-band and out-of-band management elements of a single hardware Entity, and systems where a management controller on one piece of equipment provides management for another piece of equipment.

Management Instruments

HPI Resources may host a set of Management Instruments. Each Management Instrument models the ability to monitor or control some aspect of a hardware Entity. A set of RDRs in each Resource describes the Management Instruments hosted by that Resource, including information on what is being monitored or controlled.

There are seven types of Management Instruments that may be used to model various capabilities of the platform management infrastructure. The first four (Sensors, Controls, Inventory Data Repositories and Watchdog Timers) are basic Management Instruments that usually map to discrete platform management capabilities. The other three (Annunciators, DIMIs and FUMIs) are more complex and encapsulate logical functions that the platform management infrastructure can provide.

Sensors

Sensors are used to model the capability to monitor some aspect of an Entity. HPI Sensors are modeled closely on IPMI sensors.

An HPI Sensor reports status information about the hardware being monitored through a set of up to 15 individual bits, called Event States. Each Event State can be individually asserted or deasserted, and when an Event State changes, asynchronous events can be generated to report this to an HPI user. The interpretation of each Event State can vary according to a defined Sensor Category (e.g., threshold, performance, presence, severity), or can be unique to a specific Sensor. Sensors in the threshold category have additional capabilities: they report when a value being monitored is above or below configurable threshold values. Up to three upper thresholds and three lower thresholds may be defined, corresponding to Minor, Major and Critical deviations from the norm in either direction.

In addition to reporting the status of the monitored hardware via Event States, an HPI Sensor can also report a value, called the Sensor Reading. The Sensor Reading reflects the current value of whatever is being monitored, scaled in the appropriate units. Sensor Readings may be integer values, floating point values or a block of up to 32 bytes of arbitrary data.
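The way a threshold Sensor turns a Sensor Reading into a set of Event States can be sketched in Python as follows. This is a simplified model under stated assumptions: the `ThresholdState` flag names and bit values are illustrative rather than copied from the specification, and the comparison rule (a threshold is crossed when the reading reaches it) is an assumption.

```python
from enum import IntFlag


class ThresholdState(IntFlag):
    """Illustrative Event State bits for a threshold Sensor (assumed values)."""
    LOWER_MINOR = 1
    LOWER_MAJOR = 2
    LOWER_CRITICAL = 4
    UPPER_MINOR = 8
    UPPER_MAJOR = 16
    UPPER_CRITICAL = 32


LOWER_STATES = (
    ThresholdState.LOWER_MINOR,
    ThresholdState.LOWER_MAJOR,
    ThresholdState.LOWER_CRITICAL,
)


def event_states(reading, thresholds):
    """Return the asserted Event States for a reading.

    `thresholds` maps a ThresholdState to its limit; undefined thresholds
    are simply left out of the mapping.
    """
    states = ThresholdState(0)
    for state, limit in thresholds.items():
        if state in LOWER_STATES:
            crossed = reading <= limit
        else:
            crossed = reading >= limit
        if crossed:
            states |= state
    return states


# A temperature Sensor with three upper thresholds and one lower threshold.
temperature_limits = {
    ThresholdState.LOWER_MINOR: 5.0,
    ThresholdState.UPPER_MINOR: 70.0,
    ThresholdState.UPPER_MAJOR: 80.0,
    ThresholdState.UPPER_CRITICAL: 90.0,
}
hot = event_states(85.0, temperature_limits)
```

In this model a reading of 85.0 asserts both the upper Minor and upper Major states at once, which reflects the idea that each Event State bit is asserted independently.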

Controls

Controls are used to model the capability to update some aspect of an Entity. There are several types of Controls defined in HPI, which vary according to the type of data that can be used when they are updated. Digital controls can be turned on or off, or pulsed on or off. Analog and Discrete controls can be set to a 32-bit value. Stream and Text controls can be given larger amounts of data to control the blinking of an LED, sounding of a beeper or display of data on a control panel. OEM (vendor specific) controls can be sent a block of data, which may be used in implementation-specific ways by the managed Entity.

Inventory Data Repositories (IDR)

Inventory Data Repositories are used to report or set identification and configuration information for hardware Entities. Typically, items like model number, serial number and basic configuration data are stored in ROM or flash memory on a hardware entity. This information can be read, and in some cases updated, via an HPI Inventory Data Repository.

Watchdog Timers

Watchdog Timers are devices that are often implemented with special hardware in high availability systems. These devices are set to automatically interrupt, reset or power cycle an Entity after a certain period of time if it is not programmatically reset first. The purpose of a watchdog timer device is to provide a fault-detection mechanism. The HPI Watchdog Timer Management Instrument is designed to interface with this sort of hardware mechanism. It is modeled very closely on the IPMI watchdog timer.
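The fault-detection principle behind a watchdog timer can be shown with a small simulation. The Python sketch below is not the HPI Watchdog Timer interface; the class `WatchdogTimer` and its methods are hypothetical, and time is advanced manually so that the behavior is deterministic.

```python
class WatchdogTimer:
    """Simulated watchdog: runs `action` if not reset before the timeout."""

    def __init__(self, timeout, action):
        self.timeout = timeout
        self.action = action      # e.g. interrupt, reset or power cycle
        self.remaining = timeout
        self.expired = False

    def reset(self):
        """Restart the countdown; called periodically by healthy software."""
        if not self.expired:
            self.remaining = self.timeout

    def tick(self, elapsed):
        """Advance simulated time and fire the action on expiry."""
        if self.expired:
            return
        self.remaining -= elapsed
        if self.remaining <= 0:
            self.expired = True
            self.action()


actions = []
watchdog = WatchdogTimer(timeout=5, action=lambda: actions.append("reset entity"))

watchdog.tick(3)
watchdog.reset()        # software is alive, so the countdown restarts
watchdog.tick(3)
fired_while_healthy = list(actions)

watchdog.tick(6)        # software has hung and never resets the timer
```

As long as the monitored software keeps resetting the timer, nothing happens; once the resets stop, the configured action is taken exactly once.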

Annunciators

Annunciators are logical Management Instruments that are used to interface with an alarm display function on a hardware platform. Because a wide variety of alarm display hardware (such as LEDs, audible alerts and text display panels) is used on different hardware platforms, it is difficult for an application program to be written to display alarm information in a platform-independent way. The HPI Annunciator Management Instrument provides an abstract interface to communicate alarm information to the HPI implementation or underlying management infrastructure, which can then take the appropriate actions to display that information on a particular platform.

Diagnostic Initiator Management Instruments (DIMIs)

DIMIs are logical Management Instruments used to coordinate the running of on-line or off-line diagnostic firmware or software on various hardware Entities. A DIMI indicates to the HPI user program the service impact of running diagnostics, and provides a common interface to start, stop and monitor the running of the diagnostic programs. This function is integrated with HPI to help standardize automatic diagnosis and repair of fault conditions and to support on-line serviceability.

Firmware Upgrade Management Instruments (FUMIs)

FUMIs are logical Management Instruments that are used to support the installation of firmware updates to programmable hardware Entities. For hardware Entities that include field-upgradeable firmware, the FUMI provides information on the currently installed firmware version(s) and a standard interface for identifying a new version to load and for coordinating the upgrade process, including possible backup and rollback to previous versions, if required.

Resource-level capabilities

In addition to a set of Management Instruments as described above, an HPI Resource may also provide up to four additional management capabilities. These Resource-level capabilities are essentially special Management Instruments, of which there may be at most one of each type supported by a Resource. Whether or not a particular Resource provides these miscellaneous capabilities and to which Entity they apply are described in a data record accessible by the HPI user for the Resource. A single Entity Path is defined in that record, so any of these capabilities, if present, will apply to the same Entity.

- The resource-level Power Management capability acts as a specialized Control to turn power to the designated Entity on or off.

- The resource-level Reset capability acts as a specialized Control to cause a hard or soft reset operation on the designated Entity, or if supported, to hold the reset signal in an asserted state to prevent the Entity from operating.

- The resource-level Load Management capability acts as a specialized Control that interfaces with the bootstrap program of the designated Entity to identify which operating system or other software should be loaded when a bootstrap operation is performed.

- The resource-level Configuration Management capability provides a method for an HPI user to direct the Resource to save or restore configuration information, such as sensor threshold levels, to or from a persistent storage medium.

Domain functions

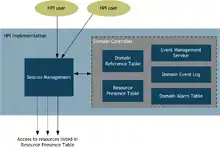

User programs access HPI-based platform management by opening a Session with a Domain. The user program may open a Session with a specific Domain by specifying a Domain Identifier, or more commonly, it may open a Session with a default Domain. With a Session established, the user program may access various Domain-level functions, or it may access any of the Resources that are currently listed as members of the Domain. Because a Session will only allow access to Resources that are currently members of the Domain, user access control can be enforced by an HPI implementation by limiting which Resources are members of each Domain, and limiting which users are allowed to establish Sessions with those Domains.
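The access-control pattern described here, in which a Session only exposes the Resources of its Domain, can be modeled in a few lines of Python. This is a conceptual sketch: the classes, the `allowed_users` attribute and the `open_session` function are hypothetical, and the specification does not prescribe how an implementation authenticates users.

```python
class Domain:
    """Hypothetical Domain holding the IDs of its member Resources."""

    def __init__(self, domain_id, allowed_users):
        self.domain_id = domain_id
        self.allowed_users = set(allowed_users)
        self.resource_ids = set()


class Session:
    """Hypothetical Session bound to exactly one Domain."""

    def __init__(self, domain):
        self.domain = domain

    def resources(self):
        """Only Resources that are currently Domain members are visible."""
        return sorted(self.domain.resource_ids)


def open_session(domain, user):
    """Open a Session, refusing users not permitted on the Domain."""
    if user not in domain.allowed_users:
        raise PermissionError(f"{user} may not open domain {domain.domain_id}")
    return Session(domain)


# Two tenants sharing one modular system, each with a separate Domain.
domain_a = Domain(1, allowed_users=["alice"])
domain_a.resource_ids.update({1, 2})
domain_b = Domain(2, allowed_users=["bob"])
domain_b.resource_ids.update({3})

alice_session = open_session(domain_a, "alice")
visible_to_alice = alice_session.resources()
```

Because visibility follows Domain membership, moving a Resource between Domains changes who can manage it without changing the Resource itself.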

One of the most important functions of the Domain is providing information, via the Resource Presence Table (RPT), about all the Resources that are members of the Domain. A second table, the Domain Reference Table (DRT) provides information about other HPI Domains that may be accessed by opening additional Sessions.

The HPI interface provides three services at the Domain level that a user program can use to stay informed of exceptional conditions in the hardware platform. The most important of these is the Event Management Service. A user may request events be forwarded from the Domain on any open Session. When significant events occur to the hardware Entities monitored by any of the Resources that are members of the Domain, Event messages are generated and queued to all open Sessions that have made such a request. Through this mechanism, user programs can stay informed of changes in the managed platform without needing to continually poll for status. Events may also be stored in the Domain Event Log and retrieved at a later time for historical analysis. Finally, the Domain Alarm Table is accessible by the user program and reports on current alarm conditions present in any of the Resources that are members of the Domain.
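The event forwarding described above follows a subscribe-and-queue pattern, which the following Python sketch illustrates. The `Domain` and `Session` classes and their methods are hypothetical, and the sketch assumes for simplicity that every event is also written to the Domain Event Log.

```python
from collections import deque


class Session:
    """Hypothetical Session with its own event queue."""

    def __init__(self):
        self.subscribed = False
        self.queue = deque()

    def subscribe(self):
        """Ask the Domain to forward events to this Session."""
        self.subscribed = True


class Domain:
    """Hypothetical Domain that fans events out to subscribed Sessions."""

    def __init__(self):
        self.sessions = []
        self.event_log = []   # kept for later historical analysis

    def open_session(self):
        session = Session()
        self.sessions.append(session)
        return session

    def publish(self, event):
        """Log the event and queue it to every Session that subscribed."""
        self.event_log.append(event)
        for session in self.sessions:
            if session.subscribed:
                session.queue.append(event)


domain = Domain()
monitor = domain.open_session()
monitor.subscribe()
idle = domain.open_session()   # open, but has not requested events

domain.publish("Blade 4 temperature above Major threshold")
```

Only the Session that subscribed receives the event in its queue, while the log retains it regardless of who was listening.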

Hot-swap management

A key feature of the HPI specification is the way it handles dynamic reconfiguration, or hot-swap actions, in the managed platform. Hot-swap refers to the ability to add or remove hardware components in a running platform. HPI refers to a hardware Entity that can be hot-swapped as a Field Replaceable Unit (FRU). Often, especially in system architectures like AdvancedTCA, FRUs include their own platform management controllers. Thus, hot-swapping a FRU can simultaneously modify both the set of hardware Entities to be managed and the infrastructure available for that management.

The HPI approach to hot-swap management reflects this by modeling the addition or removal of a hardware Entity by adding or removing a Resource in a Domain. If the FRU does not include its own management controller, the Resource may not have any management capabilities assigned to it, but it is still used to report the presence of the FRU in the system. On the other hand, if the FRU does include a management controller, then the Resource that is added to the Domain can host new Management Instruments or other capabilities and make them available to the HPI user.

The Resource associated with a FRU will always be in one of five Hot-swap States, which are readable by the HPI user: Not Present, Inactive, Insertion Pending, Active, Extraction Pending. The Not Present state is never actually reported by a Resource, because when the FRU is not present in the system, the Resource should not exist as a member of any Domain. The other four states are applicable for FRUs that are physically present in the system, whether or not they are fully operational. When a Resource changes to a new Hot-swap State, an HPI event is sent to user programs that have requested event notifications.
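The five Hot-swap States and the event sent on each state change can be modeled as a small state machine. In the Python sketch below, the `ALLOWED` transition table is a simplified assumption made for illustration (it omits, for example, unexpected removal of an active FRU); the authoritative set of transitions is defined by the specification. The class `FruResource` is likewise hypothetical.

```python
from enum import Enum


class HotSwapState(Enum):
    NOT_PRESENT = "Not Present"
    INACTIVE = "Inactive"
    INSERTION_PENDING = "Insertion Pending"
    ACTIVE = "Active"
    EXTRACTION_PENDING = "Extraction Pending"


S = HotSwapState

# Simplified, assumed transition table (not taken from the specification).
ALLOWED = {
    S.NOT_PRESENT: {S.INACTIVE},
    S.INACTIVE: {S.INSERTION_PENDING, S.NOT_PRESENT},
    S.INSERTION_PENDING: {S.ACTIVE, S.INACTIVE},
    S.ACTIVE: {S.EXTRACTION_PENDING},
    S.EXTRACTION_PENDING: {S.INACTIVE, S.ACTIVE},
}


class FruResource:
    """Hypothetical Resource tracking the Hot-swap State of one FRU."""

    def __init__(self, name, on_event):
        self.name = name
        self.state = S.NOT_PRESENT
        self.on_event = on_event   # callback standing in for HPI events

    def change_state(self, new_state):
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"{self.state.value} -> {new_state.value} not allowed")
        previous, self.state = self.state, new_state
        self.on_event((self.name, previous, new_state))


events = []
blade = FruResource("blade-4", events.append)
for state in (S.INACTIVE, S.INSERTION_PENDING, S.ACTIVE):
    blade.change_state(state)
```

Each successful transition produces one event carrying the previous and new state, which is the information a user program needs to follow a FRU through insertion and extraction.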

HPI Resources that model hot-swappable FRUs may be configured to support either Unmanaged Hot-swap or Managed Hot-swap. A Resource that supports Unmanaged Hot-swap will report its current Hot-swap State, but the user has no control over the Hot-swap operations of the FRU. When a Resource supports Managed Hot-swap, then a user program can interact with the HPI implementation and the underlying platform management infrastructure to coordinate the actions required to integrate newly added FRUs or to deactivate FRUs being removed from the system.

Backward compatibility

It is a goal of the SA Forum that new versions of its specifications be kept backward compatible with previous versions. In the case of the HPI specification, this means that user programs written to work with HPI implementations of a certain version should continue to work without change with HPI implementations that support a later version of the specification. This goal has been met with HPI specifications published since the SAI-HPI-B.01.01 specification. The “B” series of HPI specifications are not backward compatible with the SAI-HPI-A.01.01 specification.

To achieve backward compatibility of HPI specifications, several strategies are employed:

a) Functions defined in earlier versions of the HPI specification are included in later versions, with no change to the function prototype. Obsolete functions are retained, but advice is included in the specification that they should not be used by new user programs.

b) New functions may be added in new versions of the HPI specification, as long as their use is not required by existing programs.

c) Various enumerations that report data like hardware Entity types, Sensor types, etc. are declared in the HPI specification as being open-ended. The list of error return codes that HPI functions may return is also declared as open-ended. New versions of the HPI specification do not remove or change any existing enumerated values, but may add new values to an open-ended enumeration. User programs should accept values that are not currently defined and treat them as “valid but undefined.” By doing so, the program can continue to work when it is used with an implementation that is built to a newer version of the HPI specification, which may have defined new values for the enumeration.

d) Data structures passed from HPI functions to the user may not grow in length in new versions of the HPI specification or change the format of the data that was defined in previous versions. However, previously undefined bits in bit-fields may be defined in new versions of the HPI specification, and unused space in unions may be used, as long as programs that do not recognize the new bits or new use of unused space will continue to operate correctly.

e) Data structures passed to HPI functions from the user may change in new versions of the HPI specification, as long as the change is made in a way such that an existing program passing the earlier defined structure will continue to operate correctly.
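Strategy c) places a requirement on user programs: values outside the known range of an open-ended enumeration must be tolerated rather than rejected. A minimal Python sketch of this defensive pattern follows. The table `KNOWN_SENSOR_TYPES`, its numeric values and the function name are illustrative assumptions, not definitions taken from the HPI header files.

```python
# Sensor types known to this program when it was written (assumed values).
KNOWN_SENSOR_TYPES = {
    1: "TEMPERATURE",
    2: "VOLTAGE",
    3: "CURRENT",
}


def describe_sensor_type(value):
    """Name a sensor type, treating unknown values as valid but undefined."""
    name = KNOWN_SENSOR_TYPES.get(value)
    if name is None:
        # A newer implementation may report values this program predates;
        # keep working instead of raising an error.
        return f"UNDEFINED({value})"
    return name


labels = [describe_sensor_type(v) for v in (2, 250)]
```

A program written this way continues to list and monitor a Sensor of an unfamiliar type, even though it cannot give the type a meaningful name.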

HPI to xTCA Mapping Specification

Because HPI is widely used on AdvancedTCA systems, the SA Forum published a Mapping Specification, labeled SAIM-HPI-B.01.01-ATCA, in January 2006. The purpose of this specification is to provide guidance to implementers of HPI management interfaces on a recommended way to model this complex system architecture with HPI. In February 2010, a new mapping specification, SAIM-HPI-B.03.02-xTCA, was published that revises this mapping and extends it to MicroTCA systems.

The HPI to xTCA mapping specification defines a way to represent the manageability of an xTCA platform in HPI in a single HPI Domain. Entity Path naming of xTCA system components is specified, and Management Instruments are defined that reflect the platform management information and control functions available in these platforms.

The mapping specification also defines Resources for the xTCA chassis, shelf manager, carrier manager and other FRUs. In the original version of the specification, Resources were defined and required for all “Slots” in the chassis or on carrier cards that could potentially host FRUs. In the update published in 2010, these Slot resources were made optional.

The HPI to xTCA Mapping Specification serves two audiences. The first consists of platform developers who want to incorporate an HPI interface into an AdvancedTCA or MicroTCA platform. The specification provides a template for modeling the systems.

The second audience consists of HPI users that wish to create application or middleware programs that are portable across multiple AdvancedTCA or MicroTCA platforms. However, HPI users that wish to provide portable programs for both xTCA and other hardware platform architectures do not necessarily need to reference the HPI to xTCA Mapping Specification. This is because HPI implementations that follow the HPI to xTCA Mapping Specification will present basic platform management capabilities in a way that is discoverable and usable via the standard HPI interface. Some platform management capabilities that are unique to xTCA platforms are not usable without referencing the Mapping Specification, but these may be reasonably ignored by most general purpose HPI user applications.

HPI implementations

Several widely deployed implementations of the HPI specification have been produced, most notably by platform vendors that build AdvancedTCA computer systems or other high-availability computer platforms. In most implementations, the HPI API itself is provided through a library that is linked to application programs. This library module typically communicates with an HPI Server running as a daemon process, which performs the functions of the HPI Domains and Resources, communicating with an underlying management infrastructure as required.

Several HPI implementations are based on an open-source implementation of the HPI specification, called OpenHPI. OpenHPI also follows the general design shown in Figure 6, in that it includes a library module that links with application programs and a daemon module with which the library module communicates. The OpenHPI daemon process is designed to integrate with one or more plug-in modules, which handle the downstream communication with various platform management infrastructures.

The SA Forum Implementation Registry is a process that enables implementations of the SA Forum specifications to be registered and made publicly available. Membership is not required to register implementations. Implementations that have been successfully registered may be referred to as “Service Availability Forum Registered.”