Photogrammetry is the science and technology of obtaining reliable information about physical objects and the environment through the process of recording, measuring and interpreting photographic images and patterns of electromagnetic radiant imagery and other phenomena.[1]


The term photogrammetry was coined by the Prussian architect Albrecht Meydenbauer[2] and appeared in his 1867 article "Die Photometrographie."[3]


There are many variants of photogrammetry. One is the extraction of three-dimensional measurements from two-dimensional data (i.e. images): for example, the distance between two points that lie on a plane parallel to the photographic image plane can be determined by measuring their distance on the image, if the scale of the image is known. Another is the extraction of accurate color ranges and values representing such quantities as albedo, specular reflection, metallicity, or ambient occlusion from photographs of materials for the purposes of physically based rendering.
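The scale-based distance measurement described above can be sketched in a few lines. This is a minimal illustration, not a surveying tool: the function name, the units, and the sample values are assumptions made for the example, and the result only holds when both points lie on a plane parallel to the image plane.

```python
def ground_distance(image_distance_mm: float, scale_denominator: float) -> float:
    """Return the real-world distance in metres for a distance measured on the image.

    `scale_denominator` is the N in an image scale of 1:N (hypothetical input).
    Valid only when both points lie on a plane parallel to the image plane.
    """
    if image_distance_mm < 0 or scale_denominator <= 0:
        raise ValueError("distance must be non-negative and scale must be positive")
    # Scale up the image measurement, then convert millimetres to metres.
    return image_distance_mm * scale_denominator / 1000.0


# 42 mm measured on a photograph with a scale of 1:5000 (illustrative values).
print(ground_distance(42.0, 5000.0))  # 210.0
```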

Close-range photogrammetry refers to the collection of photography from a lesser distance than traditional aerial (or orbital) photogrammetry. Photogrammetric analysis may be applied to one photograph, or may use high-speed photography and remote sensing to detect, measure and record complex 2D and 3D motion fields by feeding measurements and imagery analysis into computational models in an attempt to successively estimate, with increasing accuracy, the actual, 3D relative motions.

Photogrammetry began with the stereoplotters used to plot contour lines on topographic maps; it now has a very wide range of uses and is applied to data from sensors such as sonar, radar, and lidar.

Methods

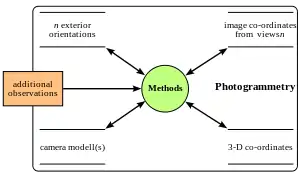

Photogrammetry uses methods from many disciplines, including optics and projective geometry. Digital image capture and photogrammetric processing include several well-defined stages, which allow the generation of 2D or 3D digital models of the object as an end product.[5] The data model on the right shows what type of information can go into and come out of photogrammetric methods.

The 3D coordinates define the locations of object points in 3D space. The image coordinates define the locations of the object points' images on the film or an electronic imaging device. The exterior orientation[6] of a camera defines its location in space and its view direction. The inner orientation defines the geometric parameters of the imaging process. This is primarily the focal length of the lens, but can also include the description of lens distortions. Additional observations also play an important role: scale bars (essentially a known distance between two points in space) or known fixed points tie the model to real-world units of measurement.
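How these four quantities relate can be illustrated with a simple pinhole projection. The sketch below is an assumption-laden example rather than a standard implementation: it ignores lens distortion, assumes the camera looks along its own +z axis, and uses hypothetical names (`project_point`, `rotation`, `principal_point`) that do not come from the text.

```python
def project_point(point, camera_position, rotation, focal_length,
                  principal_point=(0.0, 0.0)):
    """Project a 3D object point to 2D image coordinates (pinhole model).

    Exterior orientation: `camera_position` and `rotation` (3x3, rows are the
    camera axes expressed in object space). Inner orientation: `focal_length`
    and `principal_point`. Lens distortion is deliberately ignored.
    """
    # Express the object point relative to the camera position.
    offset = [p - c for p, c in zip(point, camera_position)]
    # Rotate the offset into the camera coordinate frame.
    xc, yc, zc = (sum(row[k] * offset[k] for k in range(3)) for row in rotation)
    if zc <= 0:
        raise ValueError("point is behind the camera")
    # Perspective division, scaled by focal length and shifted to the principal point.
    u = principal_point[0] + focal_length * xc / zc
    v = principal_point[1] + focal_length * yc / zc
    return u, v


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Camera at the origin, unrotated, focal length 50 (same unit as the image coordinates).
print(project_point((1.0, 2.0, 10.0), (0.0, 0.0, 0.0), IDENTITY, 50.0))  # (5.0, 10.0)
```

Here the 3D coordinates and both orientations are inputs and the image coordinates are the output; other photogrammetric methods invert this relationship to recover whichever quantity is unknown.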

Each of the four main variables can be an input or an output of a photogrammetric method.

Algorithms for photogrammetry typically attempt to minimize the sum of the squares of errors over the coordinates and relative displacements of the reference points. This minimization is known as bundle adjustment and is often performed using the Levenberg–Marquardt algorithm.
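One common way to write this objective is shown below. The notation is an assumption introduced for illustration and is not taken from the sources cited here: $\mathbf{X}_i$ denotes the 3D coordinates of point $i$, $\mathbf{p}_j$ the orientation parameters of image $j$, $\mathbf{x}_{ij}$ the measured image coordinates of point $i$ in image $j$, $\pi$ the projection function, and $\mathcal{V}$ the set of point-image pairs in which the point is actually observed.

$$
\min_{\{\mathbf{X}_i\},\,\{\mathbf{p}_j\}} \;\sum_{(i,j)\in\mathcal{V}} \left\lVert \mathbf{x}_{ij} - \pi(\mathbf{p}_j, \mathbf{X}_i) \right\rVert^2
$$

In a Levenberg–Marquardt iteration, the parameter update $\boldsymbol{\delta}$ is obtained from the damped normal equations

$$
\left(J^\top J + \lambda I\right)\boldsymbol{\delta} = -J^\top \mathbf{r},
$$

where $\mathbf{r}$ stacks all residuals, $J$ is the Jacobian of $\mathbf{r}$ with respect to the parameters, and $\lambda \ge 0$ is a damping factor that is decreased when a step reduces the error and increased when it does not.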

Stereophotogrammetry

A special case, called stereophotogrammetry, involves estimating the three-dimensional coordinates of points on an object employing measurements made in two or more photographic images taken from different positions (see stereoscopy). Common points are identified on each image. A line of sight (or ray) can be constructed from the camera location to the point on the object. It is the intersection of these rays (triangulation) that determines the three-dimensional location of the point. More sophisticated algorithms can exploit other information about the scene that is known a priori, for example symmetries, in some cases allowing reconstructions of 3D coordinates from only one camera position. Stereophotogrammetry is emerging as a robust non-contact measurement technique to determine dynamic characteristics and mode shapes of non-rotating[7][8] and rotating structures.[9][10] The collection of images for the purpose of creating photogrammetric models can more properly be called polyoscopy, after Pierre Seguin.[11]
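The ray-intersection step can be sketched as follows. Because measured rays rarely intersect exactly, this example substitutes a common approximation: it returns the midpoint of the shortest segment joining the two rays. The function names and the sample camera positions are hypothetical, and the ray directions are assumed to be already known from the image measurements and camera orientations.

```python
def dot(a, b):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(a, b))


def triangulate(origin1, direction1, origin2, direction2):
    """Return the point closest to both rays (midpoint of their common perpendicular)."""
    w = [p - q for p, q in zip(origin1, origin2)]
    a = dot(direction1, direction1)
    b = dot(direction1, direction2)
    c = dot(direction2, direction2)
    d = dot(direction1, w)
    e = dot(direction2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; no unique intersection")
    # Ray parameters that minimise the distance between the two lines.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = [o + s * k for o, k in zip(origin1, direction1)]
    p2 = [o + t * k for o, k in zip(origin2, direction2)]
    return [(u + v) / 2.0 for u, v in zip(p1, p2)]


# Two cameras one unit apart, both sighting the object point (2, 1, 10).
print(triangulate((0.0, 0.0, 0.0), (2.0, 1.0, 10.0),
                  (1.0, 0.0, 0.0), (1.0, 1.0, 10.0)))  # [2.0, 1.0, 10.0]
```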

Integration

Photogrammetric data can be complemented with range data from other techniques. Photogrammetry is more accurate in the x and y directions, while range data are generally more accurate in the z direction. This range data can be supplied by techniques like LiDAR, laser scanners (using time of flight, triangulation or interferometry), white-light digitizers and any other technique that scans an area and returns x, y, z coordinates for multiple discrete points (commonly called "point clouds"). Photos can clearly define the edges of buildings when the point cloud footprint cannot. It is beneficial to incorporate the advantages of both systems and integrate them to create a better product.

A 3D visualization can be created by georeferencing the aerial photos[12][13] and LiDAR data in the same reference frame, orthorectifying the aerial photos, and then draping the orthorectified images on top of the LiDAR grid. It is also possible to create digital terrain models, and thus 3D visualisations, using pairs (or multiples) of aerial photographs or satellite images (e.g. SPOT satellite imagery). Techniques such as adaptive least squares stereo matching are then used to produce a dense array of correspondences, which are transformed through a camera model to produce a dense array of x, y, z data that can be used to produce digital terrain models and orthoimage products. Systems which use these techniques, e.g. the ITG system, were developed in the 1980s and 1990s but have since been supplanted by LiDAR and radar-based approaches, although these techniques may still be useful in deriving elevation models from old aerial photographs or satellite images.

Applications

Photogrammetry is used in fields such as topographic mapping, architecture, filmmaking, engineering, manufacturing, quality control, police investigation, cultural heritage, and geology. Archaeologists use it to quickly produce plans of large or complex sites, and meteorologists use it to determine the wind speed of tornadoes when objective weather data cannot be obtained.

It is also used to combine live action with computer-generated imagery in film post-production; The Matrix is a good example of the use of photogrammetry in film (details are given in the DVD extras). Photogrammetry was used extensively to create photorealistic environmental assets for video games including The Vanishing of Ethan Carter as well as EA DICE's Star Wars Battlefront.[14] The main character of the game Hellblade: Senua's Sacrifice was derived from photogrammetric motion-capture models taken of actress Melina Juergens.[15]

Photogrammetry is also commonly employed in collision engineering, especially with automobiles. When litigation for a collision occurs and engineers need to determine the exact deformation present in the vehicle, several years have commonly passed, and the only evidence that remains is the crash scene photographs taken by the police. Photogrammetry is used to determine how much the car in question was deformed, which relates to the amount of energy required to produce that deformation. The energy can then be used to determine important information about the crash (such as the velocity at time of impact).
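The last step, going from deformation energy to a speed, can be illustrated with the kinetic energy relation E = ½mv². This is a deliberately simplified sketch: it assumes that all of the estimated crush energy came from the kinetic energy of a single vehicle, which real reconstructions do not assume, and the function name and sample figures are hypothetical.

```python
import math


def equivalent_speed(crush_energy_joules: float, vehicle_mass_kg: float) -> float:
    """Speed in m/s whose kinetic energy equals the given crush energy (E = 1/2 m v^2)."""
    if crush_energy_joules < 0 or vehicle_mass_kg <= 0:
        raise ValueError("energy must be non-negative and mass must be positive")
    return math.sqrt(2.0 * crush_energy_joules / vehicle_mass_kg)


# Illustrative values only: 100 kJ of deformation energy, 1500 kg vehicle.
speed = equivalent_speed(100_000.0, 1500.0)
print(f"{speed:.2f} m/s")  # 11.55 m/s
```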

Mapping

Photomapping is the process of making a map with "cartographic enhancements"[16] that have been drawn from a photomosaic[17] that is "a composite photographic image of the ground," or more precisely, as a controlled photomosaic where "individual photographs are rectified for tilt and brought to a common scale (at least at certain control points)."

Rectification of imagery is generally achieved by "fitting the projected images of each photograph to a set of four control points whose positions have been derived from an existing map or from ground measurements. When these rectified, scaled photographs are positioned on a grid of control points, a good correspondence can be achieved between them through skillful trimming and fitting and the use of the areas around the principal point where the relief displacements (which cannot be removed) are at a minimum."[16]
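Fitting a photograph to four control points corresponds to solving for a plane projective transformation, which has eight unknown parameters and is therefore determined by four point pairs. The following is a minimal sketch under that assumption; the function names and coordinates are hypothetical, and it does not model relief displacement, which, as the quotation notes, cannot be removed this way.

```python
def solve_linear(a, b):
    """Solve a square linear system by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate control points")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for k in range(col, n + 1):
                    m[r][k] -= factor * m[col][k]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_projective(image_pts, map_pts):
    """Return the 8 projective parameters mapping four image points to four map points."""
    a, b = [], []
    for (x, y), (u, v) in zip(image_pts, map_pts):
        # Two linear equations per control point, with the ninth parameter fixed to 1.
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    return solve_linear(a, b)


def rectify(h, x, y):
    """Map an image coordinate to map coordinates using the fitted parameters."""
    w = h[6] * x + h[7] * y + 1.0
    return ((h[0] * x + h[1] * y + h[2]) / w, (h[3] * x + h[4] * y + h[5]) / w)


# Corners of a 100 x 100 image matched to a 200 x 200 map square (illustrative values).
image_corners = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0), (0.0, 100.0)]
map_corners = [(0.0, 0.0), (200.0, 0.0), (200.0, 200.0), (0.0, 200.0)]
params = fit_projective(image_corners, map_corners)
print(rectify(params, 50.0, 50.0))  # approximately (100.0, 100.0)
```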

Robinson et al. wrote that "It is quite reasonable to conclude that some form of photomap will become the standard general map of the future."[18] They go on to suggest that "photomapping would appear to be the only way to take reasonable advantage" of future data sources like high altitude aircraft and satellite imagery.

Archaeology

Demonstrating the link between orthophotomapping and archaeology,[19] historic aerial photos were used to aid in developing a reconstruction of the Ventura mission that guided excavations of the structure's walls.

Overhead photography has been widely applied for mapping surface remains and excavation exposures at archaeological sites. Suggested platforms for capturing these photographs have included: war balloons from World War I;[20] rubber meteorological balloons;[21] kites;[21][22] wooden platforms and metal frameworks constructed over an excavation exposure;[21] ladders, both alone and held together with poles or planks; three-legged ladders; single and multi-section poles;[23][24] bipods;[25][26][27][28] tripods;[29] tetrapods;[30][31] and aerial bucket trucks ("cherry pickers").[32]

Handheld, near-nadir, overhead digital photographs have been used with geographic information systems (GIS) to record excavation exposures.[33][34][35][36][37]

Photogrammetry is increasingly being used in maritime archaeology because of the relative ease of mapping sites compared to traditional methods, allowing the creation of 3D maps which can be rendered in virtual reality.[38]

3D modeling

A somewhat similar application is the scanning of objects to automatically make 3D models of them. Since photogrammetry relies on images, there are physical limitations when those images are of an object that has dark, shiny or clear surfaces. In those cases, the produced model often contains gaps, so additional cleanup with software like MeshLab, netfabb or MeshMixer is usually necessary.[39] Alternatively, spray painting such objects with a matte finish can remove any transparent or shiny qualities.

Google Earth uses photogrammetry to create 3D imagery.[40]

There is also a project called Rekrei that uses photogrammetry to make 3D models of lost/stolen/broken artifacts that are then posted online.

Software

There exist many software packages for photogrammetry; see comparison of photogrammetry software.

Apple introduced a photogrammetry API called Object Capture for macOS Monterey at the 2021 Apple Worldwide Developers Conference.[41] To use the API, a Mac running macOS Monterey and a set of captured digital images are required.[42]

See also

- Aimé Laussedat – French cartographer and photographer, "father of photogrammetry"

- 3D data acquisition and object reconstruction – Scanning of an object or environment to collect data on its shape

- 3D reconstruction from multiple images – Creation of a 3D model from a set of images

- Aerial survey – Method of collecting geophysical data from high altitude aircraft

- American Society for Photogrammetry and Remote Sensing

- Collinearity equation – Two equations relating 2D sensor plane coordinates to 3D object coordinates

- Computer vision – Computerized information extraction from images

- Digital image correlation and tracking

- Edouard Deville – French surveyor (1849-1924)

- Epipolar geometry – Geometry of stereo vision

- Geoinformatics – Application of information science methods in geography, cartography, and geosciences

- Geomatics engineering – branch of engineering

- Geographic information system – System to capture, manage and present geographic data

- International Society for Photogrammetry and Remote Sensing – international non-governmental organization

- Mobile mapping – process of collecting geospatial data from a mobile vehicle

- National Collection of Aerial Photography – one of the largest collections of aerial imagery in the world, featuring historic events and places

- Neural radiance field

- Periscope – Instrument for observation from a concealed position

- Photoclinometry

- Photo interpretation

- Rangefinder – Device used to measure distances to remote objects

- Remote Sensing and Photogrammetry Society – British learned society

- Stereoplotter

- Simultaneous localization and mapping – Computational navigational technique used by robots and autonomous vehicles

- Structure from motion – Method of 3D reconstruction from moving objects

- Surveying – Science of determining the positions of points and the distances and angles between them

- Unmanned aerial photogrammetric survey – using UAVs to take aerial photos

- Videogrammetry – Measurement technology

References

- ↑ ASPRS online Archived May 20, 2015, at the Wayback Machine

- ↑ "Photogrammetry and Remote Sensing" (PDF). Archived from the original (PDF) on 2017-08-30.

- ↑ Albrecht Meydenbauer: Die Photometrographie. In: Wochenblatt des Architektenvereins zu Berlin Jg. 1, 1867, Nr. 14, S. 125–126 (Digitalisat); Nr. 15, S. 139–140 (Digitalisat); Nr. 16, S. 149–150 (Digitalisat).

- ↑ Wiora, Georg (2001). Optische 3D-Messtechnik : Präzise Gestaltvermessung mit einem erweiterten Streifenprojektionsverfahren (Doctoral dissertation). (Optical 3D-Metrology : Precise Shape Measurement with an extended Fringe Projection Method) (in German). Heidelberg: Ruprechts-Karls-Universität. p. 36. Retrieved 20 October 2017.

- ↑ Sužiedelytė-Visockienė J, Bagdžiūnaitė R, Malys N, Maliene V (2015). "Close-range photogrammetry enables documentation of environment-induced deformation of architectural heritage". Environmental Engineering and Management Journal. 14 (6): 1371–1381. doi:10.30638/eemj.2015.149.

- ↑ Ina Jarve; Natalja Liba (2010). "The Effect of Various Principles of External Orientation on the Overall Triangulation Accuracy" (PDF). Technologijos Mokslai. Estonia (86): 59–64. Archived from the original (PDF) on 2016-04-22. Retrieved 2016-04-08.

- ↑ Sužiedelytė-Visockienė, Jūratė (1 March 2013). "Accuracy analysis of measuring close-range image points using manual and stereo modes". Geodesy and Cartography. 39 (1): 18–22. doi:10.3846/20296991.2013.786881.

- ↑ Baqersad, Javad; Carr, Jennifer; et al. (April 26, 2012). Dynamic characteristics of a wind turbine blade using 3D digital image correlation. Proceedings of SPIE. Vol. 8348.

- ↑ Lundstrom, Troy; Baqersad, Javad; Niezrecki, Christopher; Avitabile, Peter (1 January 2012). "Using High-Speed Stereophotogrammetry Techniques to Extract Shape Information from Wind Turbine/Rotor Operating Data". Topics in Modal Analysis II, Volume 6. Conference Proceedings of the Society for Experimental Mechanics Series. Springer, New York, NY. pp. 269–275. doi:10.1007/978-1-4614-2419-2_26. ISBN 978-1-4614-2418-5.

- ↑ Lundstrom, Troy; Baqersad, Javad; Niezrecki, Christopher (1 January 2013). "Using High-Speed Stereophotogrammetry to Collect Operating Data on a Robinson R44 Helicopter". Special Topics in Structural Dynamics, Volume 6. Conference Proceedings of the Society for Experimental Mechanics Series. Springer, New York, NY. pp. 401–410. doi:10.1007/978-1-4614-6546-1_44. ISBN 978-1-4614-6545-4.

- ↑ Robert-Houdin, Jean-Eugene (1885) _[Magie et Physique Amusante](https://archive.org/details/magieetphysique00hougoog/page/n167/mode/2up "iarchive:magieetphysique00hougoog/page/n167/mode/2up")._ Paris: Calmann Levy p. 112

- ↑ A. Sechin. Digital Photogrammetric Systems: Trends and Developments. GeoInformatics. #4, 2014, pp. 32-34 Archived 2016-04-21 at the Wayback Machine.

- ↑ Ahmadi, FF; Ebadi, H (2009). "An integrated photogrammetric and spatial database management system for producing fully structured data using aerial and remote sensing images". Sensors. 9 (4): 2320–33. Bibcode:2009Senso...9.2320A. doi:10.3390/s90402320. PMC 3348797. PMID 22574014.

- ↑ "How we used Photogrammetry to Capture Every Last Detail for Star Wars™ Battlefront™". 19 May 2015.

- ↑ "The real-time motion capture behind 'Hellblade'". engadget.com.

- ↑ Petrie (1977: 50)

- ↑ Petrie (1977: 49)

- ↑ Robinson et al. (1977:10)

- ↑ Estes et al. (1977)

- ↑ Capper (1907)

- ↑ Guy (1932)

- ↑ Bascom (1941)

- ↑ Schwartz (1964)

- ↑ Wiltshire (1967)

- ↑ Kriegler (1928)

- ↑ Hampl (1957)

- ↑ Whittlesey (1966)

- ↑ Fant and Loy (1972)

- ↑ Straffin (1971)

- ↑ Simpson and Cooke (1967)

- ↑ Hume (1969)

- ↑ Sterud, Eugene L.; Pratt, Peter P. (1975). "Archaeological Intra-Site Recording with Photography". Journal of Field Archaeology. 2 (1/2): 151. doi:10.2307/529625. ISSN 0093-4690.

- ↑ Craig (2000)

- ↑ Craig (2002)

- ↑ Craig and Aldenderfer (2003)

- ↑ Craig (2005)

- ↑ Craig et al. (2006)

- ↑ "Photogrammetry | Maritime Archaeology". 2019-01-19. Archived from the original on 2019-01-19. Retrieved 2019-01-19.

- ↑ MAKE:3D printing by Anna Kaziunas France

- ↑ Gopal Shah, Google Earth's Incredible 3D Imagery, Explained, 2017-04-18

- ↑ "Apple's RealityKit 2 allows developers to create 3D models for AR using iPhone photos". TechCrunch. 8 June 2021. Retrieved 2022-03-09.

- ↑ Espósito, Filipe (2021-06-09). "Hands-on: macOS 12 brings new 'Object Capture' API for creating 3D models using iPhone camera". 9to5Mac. Retrieved 2022-09-26.

Sources

- "Archaeological Photography", Antiquity, vol. 10, pp. 486–490, 1936

- Bascom, W. R. (1941), "Possible Applications of Kite Photography to Archaeology and Ethnology", Illinois State Academy of Science, Transactions, vol. 34, pp. 62–63

- Capper, J. E. (1907), "Photographs of Stonehenge as Seen from a War Balloon", Archaeologia, vol. 60, no. 2, pp. 571–572, doi:10.1017/s0261340900005208

- Craig, Nathan (2005), The Formation of Early Settled Villages and the Emergence of Leadership: A Test of Three Theoretical Models in the Rio Ilave, Lake Titicaca Basin, Southern Peru (PDF), Ph.D. Dissertation, University of California Santa Barbara, Bibcode:2005PhDT.......140C, archived from the original (PDF) on 23 July 2011, retrieved 9 February 2007

- Craig, Nathan (2002), "Recording Large-Scale Archaeological Excavations with GIS: Jiskairumoko--Near Peru's Lake Titicaca", ESRI ArcNews, vol. Spring, retrieved 9 February 2007

- Craig, Nathan (2000), "Real Time GIS Construction and Digital Data Recording of the Jiskairumoko, Excavation Perú", Society for American Archaeology Bulletin, vol. 18, no. 1, archived from the original on 19 February 2007, retrieved 9 February 2007

- Craig, Nathan; Aldenderfer, Mark (2003), "Preliminary Stages in the Development of a Real-Time Digital Data Recording System for Archaeological Excavation Using ArcView GIS 3.1" (PDF), Journal of GIS in Archaeology, vol. 1, pp. 1–22, retrieved 9 February 2007

- Craig, N.; Aldenderfer, M.; Moyes, H. (2006), "Multivariate Visualization and Analysis of Photomapped Artifact Scatters" (PDF), Journal of Archaeological Science, vol. 33, no. 11, pp. 1617–1627, Bibcode:2006JArSc..33.1617C, doi:10.1016/j.jas.2006.02.018, archived from the original (PDF) on 4 October 2007

- Estes, J. E.; Jensen, J. R.; Tinney, L. R. (1977), "The Use of Historical Photography for Mapping Archaeological Sites", Journal of Field Archaeology, vol. 4, no. 4, pp. 441–447, doi:10.1179/009346977791490104

- Fant, J. E. & Loy, W. G. (1972), "Surveying and Mapping", The Minnesota Messenia Expedition

- Guy, P. L. O. (1932), "Balloon Photography and Archaeological Excavation", Antiquity, vol. 6, pp. 148–155

- Hampl, F. (1957), "Archäologische Feldphotographie", Archaeologia Austriaca, vol. 22, pp. 54–64

- Hume, I. N. (1969), Historical Archaeology, New York

- Kriegler, K. (1929), "Über Photographische Aufnahmen Prähistorischer Gräber", Mitteilungen der Anthropologischen Gesellschaft in Wien, vol. 58, pp. 113–116

- Petrie, G. (1977), "Orthophotomaps", Transactions of the Institute of British Geographers, Royal Geographical Society (with the Institute of British Geographers), Wiley, vol. 2, no. 1, pp. 49–70, doi:10.2307/622193, JSTOR 622193

- Robinson, A. H., Morrison, J. L. & Muehrcke, P. C. (1977), "Cartography 1950-2000", Transactions of the Institute of British Geographers, Royal Geographical Society (with the Institute of British Geographers), Wiley, vol. 2, no. 1, pp. 3–18, doi:10.2307/622190, JSTOR 622190

- Schwartz, G. T. (1964), "Stereoscopic Views Taken with an Ordinary Single Camera--A New Technique for Archaeologists", Archaeometry, vol. 7, pp. 36–42, doi:10.1111/j.1475-4754.1964.tb00592.x

- Simpson, D. D. A. & Cooke, F. M. B. (1967), "Photogrammetric Planning at Grantully Perthshire", Antiquity, vol. 41, pp. 220–221

- Straffin, D. (1971), "A Device for Vertical Archaeological Photography", Plains Anthropologist, vol. 16, pp. 232–234

- Wiltshire, J. R. (1967), "A Pole for High Viewpoint Photography", Industrial Commercial Photography, pp. 53–56