In formal language theory, weak equivalence of two grammars means they generate the same set of strings, i.e. that the formal language they generate is the same. In compiler theory the notion is distinguished from strong (or structural) equivalence, which additionally means that the two parse trees are reasonably similar in that the same semantic interpretation can be assigned to both.[1]

Vijay-Shanker and Weir (1994)[2] demonstrates that Linear Indexed Grammars, Combinatory Categorial Grammars, Tree-adjoining Grammars, and Head Grammars are weakly equivalent formalisms, in that they all define the same string languages.

On the other hand, if two grammars generate the same set of derivation trees (or more generally, the same set of abstract syntactic objects), then the two grammars are strongly equivalent. Chomsky (1963)[3] introduces the notion of strong equivalence, and argues that only strong equivalence is relevant when comparing grammar formalisms. Kornai and Pullum (1990)[4] and Miller (1994)[5] offer a refined notion of strong equivalence that allows for isomorphic relationships between the syntactic analyses given by different formalisms. Yoshinaga, Miyao, and Tsujii (2002)[6] offers a proof that for any LTAG formalism, there is a strongly equivalent HPSG one.

Context-free grammar example

As an example, consider the following two context-free grammars,[note 1] given in Backus-Naur form:

<expression> ::= <expression> "+" <expression> | <expression> "-" <expression>

| <expression> "*" <expression> | <expression> "/" <expression>

| "x" | "y" | "z" | "1" | "2" | "3" | "(" <expression> ")"

<expression> ::= <term> | <expression> "+" <term> | <expression> "-" <term>

<term> ::= <factor> | <term> "*" <factor> | <term> "/" <factor>

<factor> ::= "x" | "y" | "z" | "1" | "2" | "3" | "(" <expression> ")"

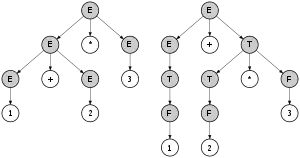

Both grammars generate the same set of strings, viz. the set of all arithmetical expressions that can be built from the variables "x", "y", "z", the constants "1", "2", "3", the operators "+", "-", "*", "/", and parentheses "(" and ")". However, a concrete syntax tree of the second grammar always reflects the usual order of operations, while a tree from the first grammar need not.

For the example string "1+2*3", the right part of the picture shows its unique parse tree with the second grammar;[note 2] evaluating this tree in postfix order will yield the proper value, 7. In contrast, the left picture part shows one of the parse trees for that string with the first grammar; evaluating it in postfix order will yield 9.

Since the second grammar cannot generate a tree corresponding to the left picture part, while the first grammar can, both grammars are not strongly equivalent.

Generative capacity

In linguistics, the weak generative capacity of a grammar is defined as the set of all strings generated by it,[note 3] while a grammar's strong generative capacity refers to the set of "structural descriptions"[note 4] generated by it.[7] As a consequence, two grammars are considered weakly equivalent if their weak generative capacities coincide; similar for strong equivalence. The notion of generative capacity was introduced by Noam Chomsky in 1963.[3][7]

Notes

- ↑ with the start symbol "<expression>"

- ↑ using the abbreviation "E", "T", and "F" for <expression>, <term>, and <factor>, respectively

- ↑ for context-free grammars: see Context-free grammar#Context-free language for a formal definition

- ↑ for context-free grammars: concrete syntax trees

References

- ↑ Stefano Crespi Reghizzi (2009). Formal Languages and Compilation. Springer. p. 57. ISBN 978-1-84882-049-4.

- ↑ Vijay-Shanker, K. and Weir, David J. (1994). "The Equivalence of Four Extensions of Context-Free Grammars". Mathematical Systems Theory. 27 (6): 511–546. doi:10.1007/BF01191624. S2CID 12336597.

{{cite journal}}: CS1 maint: multiple names: authors list (link) - 1 2 Noam Chomsky (1963). "Formal properties of grammar". In R.D. Luce; R.R. Bush; E. Galanter (eds.). Handbook of Mathematical Psychology. Vol. II. New York: Wiley. pp. 323—418.

- ↑ Kornai, A. and Pullum, G. K. 1990. The X-bar Theory of Phrase Structure. Language, 66:24-50.

- ↑ Miller, Philip H. 1999. Strong Generative Capacity. CSLI publications.

- ↑ "Yoshinaga, N., Miyao Y., and Tsujii, J. 2002. A formal proof of strong equivalence for a grammar conversion from LTAG to HPSG-style. In the Proceedings of the TAG+6 Workshop:187-192. Venice, Italy" (PDF). Archived from the original (PDF) on 2011-08-28. Retrieved 2012-02-05.

- 1 2 Emmon Bach; Philip Miller (2003). "Generative Capacity" (PDF). In William J. Frawley (ed.). International Encyclopedia of Linguistics (2nd ed.). Oxford University Press. doi:10.1093/acref/9780195139778.001.0001. ISBN 9780195139778.