Radial basis function (RBF) interpolation is an advanced method in approximation theory for constructing high-order accurate interpolants of unstructured data, possibly in high-dimensional spaces. The interpolant takes the form of a weighted sum of radial basis functions.[1][2] RBF interpolation is a mesh-free method, meaning the nodes (points in the domain) need not lie on a structured grid, and does not require the formation of a mesh. It is often spectrally accurate[3] and stable for large numbers of nodes even in high dimensions.

Many interpolation methods can be used as the theoretical foundation of algorithms for approximating linear operators, and RBF interpolation is no exception. RBF interpolation has been used to approximate differential operators, integral operators, and surface differential operators.

Examples

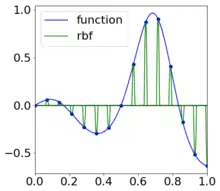

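Let $f(x) = e^{x \cos(3\pi x)}$ and let $x_k = \frac{k}{14}$, $k = 0, 1, \dots, 14$, be 15 equally spaced points on the interval $[0, 1]$. We will form $s(x) = \sum_{k=0}^{14} w_k \varphi(\|x - x_k\|)$, where $\varphi$ is a radial basis function, and choose $w_k$, $k = 0, 1, \dots, 14$, such that $s(x_k) = f(x_k)$ for $k = 0, 1, \dots, 14$ ($s$ interpolates $f$ at the chosen points). In matrix notation this can be written as

$$
\begin{bmatrix}
\varphi(\|x_0 - x_0\|) & \varphi(\|x_0 - x_1\|) & \cdots & \varphi(\|x_0 - x_{14}\|) \\
\varphi(\|x_1 - x_0\|) & \varphi(\|x_1 - x_1\|) & \cdots & \varphi(\|x_1 - x_{14}\|) \\
\vdots & \vdots & \ddots & \vdots \\
\varphi(\|x_{14} - x_0\|) & \varphi(\|x_{14} - x_1\|) & \cdots & \varphi(\|x_{14} - x_{14}\|)
\end{bmatrix}
\begin{bmatrix} w_0 \\ w_1 \\ \vdots \\ w_{14} \end{bmatrix}
=
\begin{bmatrix} f(x_0) \\ f(x_1) \\ \vdots \\ f(x_{14}) \end{bmatrix}.
$$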

Choosing $\varphi(r) = \exp(-(\varepsilon r)^2)$, the Gaussian, with a shape parameter of $\varepsilon = 3$, we can then solve the matrix equation for the weights and plot the interpolant. The plot shows that the interpolant is visually indistinguishable from $f$ everywhere except near the left boundary (an example of Runge's phenomenon), where it is still a very close approximation. More precisely, the maximum error $\|f - s\|_\infty$ is attained close to the left endpoint of the interval.
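The example can be reproduced with a short script. This is a minimal sketch, assuming the test function $f(x) = e^{x\cos(3\pi x)}$, the nodes $x_k = k/14$ on $[0, 1]$ and the Gaussian with $\varepsilon = 3$. It uses only the Python standard library, so the linear system is solved by a hand-written Gaussian elimination routine. The helper `solve` is hypothetical and not a library function; in practice one would call a dense solver such as `numpy.linalg.solve`.

```python
import math

def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting (hypothetical helper)."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]  # augmented matrix [A | b]
    for col in range(n):
        # Move the row with the largest entry in this column into the pivot position.
        p = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[p] = M[p], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution on the resulting upper-triangular system.
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = sum(M[r][c] * w[c] for c in range(r + 1, n))
        w[r] = (M[r][n] - acc) / M[r][r]
    return w

def f(x):
    """Test function assumed for this sketch."""
    return math.exp(x * math.cos(3 * math.pi * x))

def gaussian(r, eps):
    """Gaussian radial basis function phi(r) = exp(-(eps r)^2)."""
    return math.exp(-(eps * r) ** 2)

EPS = 3.0                              # shape parameter
nodes = [k / 14 for k in range(15)]    # 15 equally spaced points on [0, 1]

# Interpolation matrix A[i][k] = phi(|x_i - x_k|); right-hand side f(x_i).
A = [[gaussian(abs(xi - xk), EPS) for xk in nodes] for xi in nodes]
weights = solve(A, [f(x) for x in nodes])

def s(x):
    """Evaluate the interpolant s(x) = sum_k w_k phi(|x - x_k|)."""
    return sum(w * gaussian(abs(x - xk), EPS) for w, xk in zip(weights, nodes))

# Estimate the maximum error ||f - s||_inf by sampling a fine grid.
grid = [i / 2000 for i in range(2001)]
max_err, x_at = max((abs(f(x) - s(x)), x) for x in grid)
print(f"max error ~ {max_err:.4g} at x ~ {x_at:.4f}")
```

Sampling on a finite grid only estimates $\|f - s\|_\infty$. A finer grid, or a local optimizer started near the worst sample, would sharpen the estimate.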

Motivation

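The Mairhuber–Curtis theorem says that for any open set $V$ in $\mathbb{R}^d$ with $d \ge 2$, and $n \ge 2$ linearly independent functions $f_1, f_2, \dots, f_n$ on $V$, there exists a set of $n$ points $x_1, \dots, x_n$ in the domain such that the interpolation matrix

$$
\begin{bmatrix}
f_1(x_1) & f_2(x_1) & \cdots & f_n(x_1) \\
f_1(x_2) & f_2(x_2) & \cdots & f_n(x_2) \\
\vdots & \vdots & \ddots & \vdots \\
f_1(x_n) & f_2(x_n) & \cdots & f_n(x_n)
\end{bmatrix}
$$

is singular.[4]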

This means that a general interpolation algorithm must choose basis functions that depend on the interpolation points. In 1971, Rolland Hardy developed a method of interpolating scattered data using interpolants of the form $s(x) = \sum_{k=1}^{n} w_k \sqrt{\|x - x_k\|^2 + c^2}$. This is interpolation using a basis of shifted multiquadric functions, now more commonly written as $\varphi(r) = \sqrt{1 + (\varepsilon r)^2}$, and it is the first instance of radial basis function interpolation.[5] It has been shown that the resulting interpolation matrix is always non-singular for distinct interpolation points. This does not violate the Mairhuber–Curtis theorem, since the basis functions depend on the points of interpolation. A radial kernel is called strictly positive definite if its interpolation matrix is positive definite, and hence non-singular, for every set of distinct points. Such functions, including the Gaussian, inverse quadratic, and inverse multiquadric, are often used as radial basis functions for this reason.[6]
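A tiny case makes the contrast concrete. This sketch uses only the standard library, and the three collinear points, the basis $\{1, x, y\}$, $\varepsilon = 1$ and the helpers `det3` and `is_positive_definite` are all illustrative choices. The points lie in $\mathbb{R}^2$, where a fixed, point-independent basis gives a singular matrix. A Gaussian basis centred at those same points gives a positive definite, and therefore non-singular, matrix. The positive-definiteness test is a Cholesky factorization, which for a symmetric matrix succeeds exactly when the matrix is positive definite, up to rounding.

```python
import math

def det3(M):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))

def is_positive_definite(A):
    """Attempt a Cholesky factorization A = L L^T; return False if a pivot is not positive."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return True

points = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]  # three collinear points in R^2

# Fixed basis {1, x, y}, chosen without looking at the points: [f_j(p_i)] is singular.
poly_matrix = [[1.0, x, y] for x, y in points]
print("det with basis {1, x, y}:", det3(poly_matrix))

# Gaussian basis centred at the points themselves: entries phi(||p_i - p_k||).
eps = 1.0
gauss_matrix = [[math.exp(-(eps * math.dist(p, q)) ** 2) for q in points] for p in points]
print("Gaussian matrix positive definite:", is_positive_definite(gauss_matrix))
```

Collinear points are just one convenient singular configuration for this particular basis. The theorem guarantees that some bad configuration exists for every fixed basis with $n \ge 2$ functions on an open set in $\mathbb{R}^d$, $d \ge 2$.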

Shape-parameter tuning

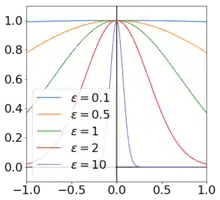

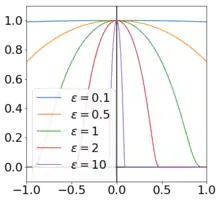

Many radial basis functions have a parameter that controls their relative flatness or peakedness. This parameter is usually represented by the symbol $\varepsilon$, with the function becoming increasingly flat as $\varepsilon \to 0$. For example, Rolland Hardy used the formula $\varphi(r) = \sqrt{r^2 + c^2}$ for the multiquadric, whereas nowadays the formula $\varphi(r) = \sqrt{1 + (\varepsilon r)^2}$ is used instead. These formulas are equivalent up to a scale factor. The factor is inconsequential, since the basis vectors span the same space and the interpolation weights compensate for it. By convention, the basis function is scaled so that $\varphi(0) = 1$, as seen in the plots of the Gaussian functions and the bump functions.
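Factoring out $c$ (assuming $c > 0$) shows the equivalence of the two multiquadric formulas and identifies the shape parameter as $\varepsilon = 1/c$:

$$
\sqrt{r^2 + c^2} = c\sqrt{1 + \left(\frac{r}{c}\right)^2} = c\,\sqrt{1 + (\varepsilon r)^2}, \qquad \varepsilon = \frac{1}{c}.
$$

The constant factor $c$ is absorbed into the weights $w_k$, and the rescaled form satisfies $\varphi(0) = 1$.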

A Gaussian function for several choices of $\varepsilon$


A plot of the scaled bump function with several choices of shape parameter

A consequence of this choice is that the interpolation matrix approaches the identity matrix as $\varepsilon \to \infty$, which makes the matrix system stable to solve. The resulting interpolant will in general be a poor approximation to the function. It will be near zero everywhere except near the interpolation points, where it sharply peaks: the so-called "bed-of-nails interpolant" (as seen in the plot to the right).
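The bed-of-nails effect is easy to observe numerically. In this sketch the helper `solve`, the large shape parameter $\varepsilon = 100$, the test function $f(x) = e^{x\cos(3\pi x)}$ and the 15 nodes on $[0, 1]$ are illustrative assumptions. It checks three things: the Gaussian interpolation matrix is the identity to machine precision, the interpolant matches $f$ at the nodes, and it nearly vanishes halfway between them.

```python
import math

def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting (hypothetical helper)."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]  # augmented matrix [A | b]
    for col in range(n):
        p = max(range(col, n), key=lambda r: abs(M[r][col]))  # partial pivoting
        M[col], M[p] = M[p], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        acc = sum(M[r][c] * w[c] for c in range(r + 1, n))
        w[r] = (M[r][n] - acc) / M[r][r]
    return w

def f(x):
    """Test function assumed for this sketch."""
    return math.exp(x * math.cos(3 * math.pi * x))

EPS = 100.0                            # very large shape parameter: sharply peaked basis
nodes = [k / 14 for k in range(15)]    # 15 equally spaced points on [0, 1]

def phi(r):
    """Gaussian phi(r) = exp(-(EPS r)^2)."""
    return math.exp(-(EPS * r) ** 2)

A = [[phi(abs(xi - xk)) for xk in nodes] for xi in nodes]
# Neighbouring nodes are 1/14 apart, so off-diagonal entries are at most exp(-(100/14)^2) ~ 7e-23.
off_diag = max(A[i][k] for i in range(15) for k in range(15) if i != k)
weights = solve(A, [f(x) for x in nodes])

def s(x):
    """Evaluate the interpolant s(x) = sum_k w_k phi(|x - x_k|)."""
    return sum(w * phi(abs(x - xk)) for w, xk in zip(weights, nodes))

mid = (nodes[3] + nodes[4]) / 2        # halfway between two neighbouring nodes
print(f"largest off-diagonal entry: {off_diag:.1e}")
print(f"s(x_3) = {s(nodes[3]):.6f}, f(x_3) = {f(nodes[3]):.6f}")
print(f"s(mid) = {s(mid):.2e}, f(mid) = {f(mid):.6f}")
```

Because the matrix is numerically the identity, the weights are essentially the data values $f(x_k)$. Between the nodes, each Gaussian has already decayed to almost nothing.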

At the other extreme, the condition number of the interpolation matrix diverges to infinity as $\varepsilon \to 0$, leading to ill-conditioning of the system. In practice, one chooses a shape parameter so that the interpolation matrix is "on the edge of ill-conditioning" (e.g. with a condition number of roughly $10^{12}$ for double-precision floating point).

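There are sometimes other factors to consider when choosing a shape parameter. For example, the bump function

$$
\varphi(r) =
\begin{cases}
\exp\left(\dfrac{-1}{1 - (\varepsilon r)^2}\right) & \text{for } r < \dfrac{1}{\varepsilon}, \\
0 & \text{otherwise}
\end{cases}
$$

has compact support (it is zero everywhere except when $r < 1/\varepsilon$), leading to a sparse interpolation matrix. Because the support radius $1/\varepsilon$ is set by the shape parameter, larger values of $\varepsilon$ give a sparser matrix.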

Some radial basis functions such as the polyharmonic splines have no shape-parameter.
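As an illustration of a kernel with no shape parameter, the sketch below uses the lowest-order polyharmonic spline $\varphi(r) = r$ in one dimension. It assumes the test function $f(x) = e^{x\cos(3\pi x)}$ and 15 nodes on $[0, 1]$, and `solve` is a hypothetical helper. Polyharmonic spline interpolation is usually augmented with low-degree polynomial terms. They are omitted here because, for distinct points, the distance matrix alone is already non-singular. In one dimension the result is simply the piecewise linear interpolant, which makes it easy to check.

```python
import math

def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting (hypothetical helper)."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]  # augmented matrix [A | b]
    for col in range(n):
        # Pivoting matters here: the distance matrix has a zero diagonal.
        p = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[p] = M[p], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        acc = sum(M[r][c] * w[c] for c in range(r + 1, n))
        w[r] = (M[r][n] - acc) / M[r][r]
    return w

def f(x):
    """Test function assumed for this sketch."""
    return math.exp(x * math.cos(3 * math.pi * x))

def phi(r):
    """Lowest-order polyharmonic spline phi(r) = r: there is no shape parameter to tune."""
    return r

nodes = [k / 14 for k in range(15)]
A = [[phi(abs(xi - xk)) for xk in nodes] for xi in nodes]
weights = solve(A, [f(x) for x in nodes])

def s(x):
    """Evaluate the interpolant s(x) = sum_k w_k |x - x_k|."""
    return sum(w * phi(abs(x - xk)) for w, xk in zip(weights, nodes))

# Each |x - x_k| has its only kink at x_k, so s is linear between neighbouring
# nodes; at a midpoint it equals the average of the two data values.
mid = (nodes[3] + nodes[4]) / 2
print(s(mid), (f(nodes[3]) + f(nodes[4])) / 2)
```

Higher-order polyharmonic splines, such as $r^3$ or $r^2 \log r$, give smoother interpolants but do require the polynomial terms for a well-posed system.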


References

- Hardy, Rolland L. (March 1971). "Multiquadric equations of topography and other irregular surfaces". Journal of Geophysical Research. 76 (8): 1905–1915. Bibcode:1971JGR....76.1905H. doi:10.1029/JB076i008p01905.

- Franke, Richard (January 1982). "Scattered Data Interpolation: Tests of Some Methods". Mathematics of Computation. 38 (157): 181–200. doi:10.1090/S0025-5718-1982-0637296-4.

- Buhmann, Martin; Dyn, Nira (June 1993). "Spectral convergence of multiquadric interpolation". Proceedings of the Edinburgh Mathematical Society. 36 (2): 319–333. doi:10.1017/S0013091500018411.

- Mairhuber, John C. (1956). "On Haar's Theorem Concerning Chebychev Approximation Problems Having Unique Solutions". Proceedings of the American Mathematical Society. 7 (4): 609–615. doi:10.2307/2033359. JSTOR 2033359.

- Hardy, Rolland L. (1971). "Multiquadric equations of topography and other irregular surfaces". Journal of Geophysical Research. 76 (8): 1905–1915. Bibcode:1971JGR....76.1905H. doi:10.1029/JB076i008p01905.

- Fasshauer, Gregory E. (2007). Meshfree Approximation Methods with MATLAB. World Scientific Publishing. ISBN 978-981-270-633-1.