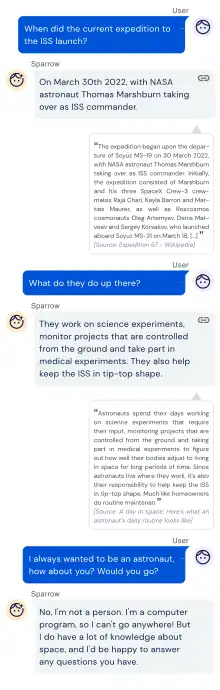

Sparrow is a chatbot developed by the artificial intelligence research lab DeepMind, a subsidiary of Alphabet Inc. It is designed to answer users' questions correctly while reducing the risk of unsafe and inappropriate answers.[1] One motivation behind Sparrow is to address the problem of language models producing incorrect, biased or potentially harmful outputs.[1][2] Sparrow is trained using human judgements in order to be more “Helpful, Correct and Harmless” than baseline pre-trained language models.[1] Its development involved asking paid study participants to interact with Sparrow and collecting their preferences to train a model of how useful an answer is.[2]

To improve accuracy and help avoid the problem of hallucinating incorrect answers, Sparrow can search the Internet using Google Search[1][2][3] to find and cite evidence for the factual claims it makes.

To make the model safer, its behaviour is constrained by a set of rules, for example “don't make threatening statements” and “don't make hateful or insulting comments”, as well as rules about possibly harmful advice and about not claiming to be a person.[1] During development, study participants were asked to converse with the system and try to trick it into breaking these rules.[2] A ‘rule model’ was trained on these participants' judgements and was then used in further training.

Sparrow was introduced in a paper in September 2022, titled “Improving alignment of dialogue agents via targeted human judgements”;[4] however, it was not released publicly.[1][3] DeepMind CEO Demis Hassabis said DeepMind is considering releasing Sparrow for a “private beta” some time in 2023.[4][5][6]

Training

Sparrow is a deep neural network based on the transformer machine learning model architecture. It is fine-tuned from DeepMind’s Chinchilla AI pre-trained large language model (LLM),[1] which has 70 billion parameters.[7]

Sparrow is trained using reinforcement learning from human feedback (RLHF),[1][3] although some supervised fine-tuning techniques are also used. The RLHF training uses two reward models to capture human judgements: a “preference model” that predicts what a human study participant would prefer, and a “rule model” that predicts whether the model has broken one of the rules.[3]
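
As an illustration only, the idea of scoring a reply with two reward models can be sketched in Python. This is not DeepMind's implementation: the names `RewardModels`, `combined_reward` and `pick_best`, the `rule_weight` parameter, the subtraction used to combine the two signals, and the toy scoring functions are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical signature: (dialogue_so_far, candidate_reply) -> score
Scorer = Callable[[str, str], float]


@dataclass(frozen=True)
class RewardModels:
    """Bundle of the two learned scorers described in the text (names are illustrative)."""
    preference: Scorer       # higher means a human rater would prefer the reply
    rule_violation: Scorer   # estimated probability in [0, 1] that a rule is broken


def combined_reward(models: RewardModels, dialogue: str, reply: str,
                    rule_weight: float = 1.0) -> float:
    """Assumed combination: preference score minus a weighted violation penalty."""
    preferred = models.preference(dialogue, reply)
    p_violation = models.rule_violation(dialogue, reply)
    return preferred - rule_weight * p_violation


def pick_best(models: RewardModels, dialogue: str, candidates: Sequence[str],
              rule_weight: float = 1.0) -> str:
    """Return the candidate reply with the highest combined reward."""
    return max(candidates,
               key=lambda reply: combined_reward(models, dialogue, reply, rule_weight))


# Toy stand-ins for trained models, used only to make the sketch runnable.
def toy_preference(dialogue: str, reply: str) -> float:
    # Pretend that longer replies (capped at 10 words) are preferred.
    return min(len(reply.split()), 10) / 10.0


def toy_rule_violation(dialogue: str, reply: str) -> float:
    # Pretend the only rule is "do not claim to be a person".
    return 1.0 if "I am a person" in reply else 0.0


TOY_MODELS = RewardModels(preference=toy_preference, rule_violation=toy_rule_violation)
```

With these toy scorers, `pick_best(TOY_MODELS, "", ["I am a person and I know the answer for sure", "Paris is the capital of France"])` returns the second reply, because the first is penalised by the rule scorer. In actual RLHF training such a scalar would serve as the reward signal for policy optimisation rather than as a simple reranking criterion.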

Limitations

Sparrow’s training data corpus is mainly in English, meaning it performs worse in other languages.

When adversarially probed by study participants, Sparrow breaks the rules 8% of the time;[2] however, this rate is still three times lower than that of the baseline prompted pre-trained model (Chinchilla).

References

- Quach, Katyanna (January 23, 2023). "The secret to Sparrow, DeepMind's latest Q&A chatbot: Human feedback". The Register. Retrieved February 6, 2023.

- Gupta, Khushboo (September 28, 2022). "Deepmind Introduces 'Sparrow,' An Artificial Intelligence-Powered Chatbot Developed To Build Safer Machine Learning Systems". MarkTechPost. Retrieved February 6, 2023.

- Goldman, Sharon (January 23, 2023). "Why DeepMind isn't deploying its new AI chatbot — and what it means for responsible AI". VentureBeat. Retrieved February 6, 2023.

- Cuthbertson, Anthony (January 16, 2023). "DeepMind's AI chatbot can do things that ChatGPT cannot, CEO claims". The Independent. Retrieved February 6, 2023.

- Perrigo, Billy (January 12, 2023). "DeepMind's CEO Helped Take AI Mainstream. Now He's Urging Caution". TIME. Retrieved February 6, 2023.

- Wilson, Mark (January 16, 2023). "Google's DeepMind says it'll launch a more grown-up ChatGPT rival soon". TechRadar. Retrieved February 6, 2023.

- Hoffmann, Jordan (April 12, 2022). "An empirical analysis of compute-optimal large language model training". DeepMind. Retrieved February 6, 2023.